Concurrency and parallelism have always been a mystery to me as a developer. I used to avoid them, but recently, I decided to face my fears and learn the topic. My recent growing interest in distributed systems partly motivated this decision.

I’m a strong believer that the best way to learn anything in CS is to dive in and start building and implementing. It might not be the fastest way to learn, but it is certainly an efficient method to understand different concepts well. So, I decided to build a concurrent web crawler using Go, and hence, this tutorial.

Table of contents

Open Table of contents

What are we building and why ?

In this tutorial, we are building a web crawler that can use multiple threads to fetch and crawl several URLs efficiently. I think this is a perfect project to learn concurrency. Besides, it is quite handy, you can use it to crawl websites for news, coupons, prices, etc.

Concurrency is an important aspect of web crawling. Consider a website with 5,000 URLs: If it takes 500 milliseconds to fetch a web page, scrape it, and store the results, a serial (synchronous) web crawler that processes each URL sequentially would require approximately 2,500 seconds, or about 40 minutes, to complete the task. This approach, using only a single thread to handle all 5,000 crawling operations, is inefficient and underutilizes the capabilities of modern machines, which can manage thousands of threads simultaneously.

As for why we are using Go, I believe Go is a great language for concurrent programming. Historically, Go implements some core ideas first published by the British computer scientist and Turing Award laureate, Tony Hoare, in his seminal work “Communicating Sequential Processes”. Mechanisms like goroutines, channels, and other concepts in Go are inspired by this paper.

The difference between concurrency and parallelism

It is quite common to confuse concurrency and parallelism, as they sound very similar. If we look at an English dictionary, the term “concurrence” means the co-existence of two or more things, while “parallel” is two or more events happening simultaneously. The definition is very similar, and the confusion is quite understandable.

One of the best distinctions between concurrency and parallelism comes from Rob Pike, the founder of the Go language, in his brilliant talk “Concurrency is not Parallelism”, a talk that I highly recommend watching, it offers some great insights about concurrency and parallelism with Go.

He presents concurrency as a programming model. Concurrent code allows for different independent processes (functions, features, etc.) to co-exist. It’s a way to structure code to deal with many things independently but not necessarily at the same time, as he puts it. Parallelism, on the other hand, is the act of running these processes at the same time, either using a multicore CPU or several machines.

Programs can be built in a concurrent way without being executed in parallel. It is more of a paradigm. You have to think about the different parts of your code and how they can co-exist independently so that no part blocks the other. Good concurrent code will indeed run faster once executed on a parallel system because it has been designed for that.

A brief overview of processes and threads

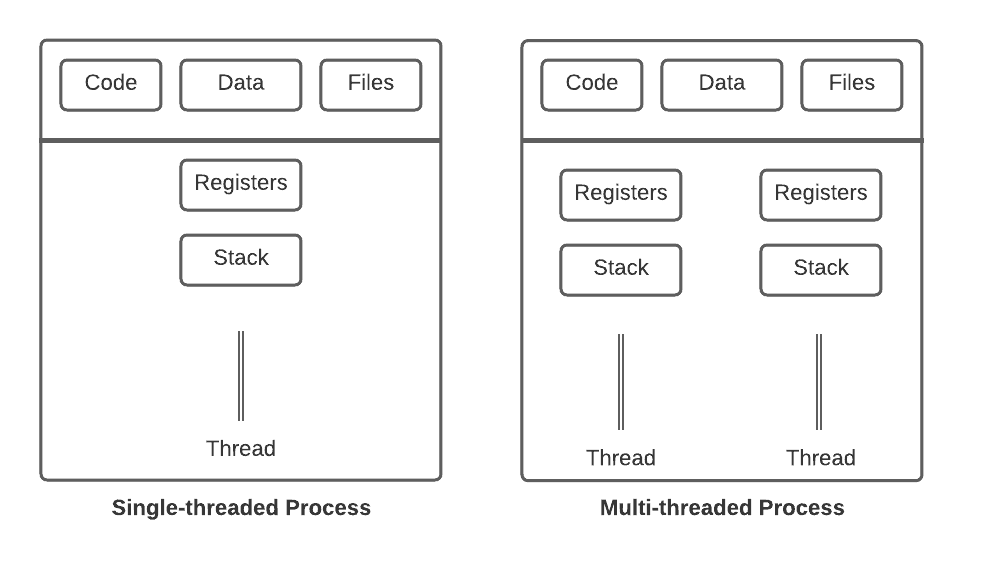

A great explanation of processes and threads can be found in the book “Distributed Systems” by van Steen and Tanenbaum. They define a process as a logical program with its own memory space and context, known as process context, which holds a program counter and some other values. Although processes are all executed on the same CPU, the OS ensures that processes are well separated to avoid malicious attacks from one program to another.

Threads are smaller logical units that run a piece of code, they are executed inside processes and they share all the same address space within the same process. The thread context is composed mainly of the process context along with some additional information to manage the thread. Therefore, when we say multi-threaded programming, we are building a program that rely on several threads to operate efficiently.

In the case of the web crawler we are building, you can think of crawling each URL as an independent task that can run in its thread. Using one thread per crawling operation allows us to crawl a large set of pages using multiple threads, which can run in parallel on a multi-core machine.

Baby steps: a sequential web crawler

We will first start with a simple sequential web crawler to get the basic ideas first, this crawler will simply send an HTTP request to the base url (we will use Golang homepage as an example throughout this project). The web crawler will perform the following steps:

-

Fetch the initial base page

-

Extract the title and the list of URLs

-

Add each visited URL to a map to keep track of the visited URLs

-

Have a counter that is incremented every time a URL has been crawled, we compare this counter to a threshold constant

maxLinks, this guarantees that the program will not run forever.

Talk is cheap, here is the code for main.go:

package main

import (

"fmt"

"annis/webcrawler/crawler"

)

const maxLinks = 20

func crawl(link string, visitedUrls map[string]bool, counter *int) []string {

if *counter >= maxLinks {

return nil

}

basePage, err := crawler.FetchUrl(link)

if err != nil {

fmt.Println("Error: ", err)

return nil

}

visitedUrls[link] = true

fmt.Println("Title: ", basePage.Title)

var newUrls []string

for _, url := range basePage.UrlList {

if *counter >= maxLinks {

break

}

fullUrl, err := crawler.ResolveURL(url, link)

if err != nil {

fmt.Println("Error: ", err)

continue

}

if !visitedUrls[fullUrl] {

*counter++

newUrls = append(newUrls, fullUrl)

}

}

return newUrls

}

func main() {

startUrl := "http://golang.org"

visitedUrls := make(map[string]bool)

counter := 0

toVisit := []string{startUrl}

for len(toVisit) > 0 && counter < maxLinks {

currentUrl := toVisit[0]

toVisit = toVisit[1:]

if visitedUrls[currentUrl] {

newUrls := crawl(currentUrl, visitedUrls, &counter)

toVisit = append(toVisit, newUrls...)

}

}

}Important Note: The package annis/webcrawler/crawler holds a set of helper function for sending HTTP requests, and extracting links using the HTML <a> tag as well as the page title. It also has a ResolveUrl function which validates URLs: We only crawl URLs that are part of the Golang website to avoid running a crawler that would start crawling hundrends of websites… we have no plans of building another Google 😅.

The code for this package is a bit too long to include in this blog post, but you can grab it crawler.gofrom the project repository on Github.

In this web crawler, we start by defining a few constants and variables. The maxLinks constant sets a limit on how many web pages we want to visit, which helps keep our program from running indefinitely as previously explained. We also create a map called visitedUrls to keep track of the URLs we’ve already visited, ensuring we don’t visit the same page multiple times. The counter variable keeps count of how many URLs we’ve processed so far.

The main logic of our crawler is in the crawl function. This function takes a URL, fetches the content of the page, and prints the title. It also looks for other URLs on the page and adds them to a list of URLs to visit next. Before adding a URL, it makes sure that the counter hasn’t exceeded the maxLinks limit and that the URL hasn’t already been visited. This prevents our crawler from getting stuck on a single page or visiting too many pages.

The main function is where we kick off the crawling process. We start with a single URL (startUrl), which is added to a list called toVisit. This list keeps track of the pages we need to crawl next. We use a simple loop to process each URL in the toVisit list. For each URL, we call the crawl function, which fetches the page and gathers new URLs to visit. These new URLs are then added to the toVisit list, and the process repeats until we’ve visited the maximum number of pages.

As you can see, this crawler is sequential, it has to wait for each URL to finish processing which is quite inefficient, once a request has been sent, we should proceed with the program execution, there is no need to wait for the response and do nothing. This is where concurrency comes in.

Making the crawler concurrent

We will now proceed to change the design of the web crawler into a concurrent one. The core function for fetching URLs is the crawl function which accepts a URL as an input and returns the list of different parsed URLs from the page.

Go allow us to run this function concurrently by preceding its call with the go keyword, this will create a goroutine which will run asynchronously. You can think of go-routines as spawning a thread, however, this is not completely true, go-routines are much lighter than threads, and your program can have 100k of go-routines without any problem. The main point to understand is that the moment you call a go routine it will start running independently of the main thread and thus the program execution will not block. In the code we just need to change the call inside the for loop:

for _, u := range basePage.UrlList {

fullUrl, err := crawler.ResolveURL(u, startUrl)

if err != nil {

fmt.Println("Error: ", err)

continue

}

if _, loaded := visitedUrls.LoadOrStore(fullUrl, true); !loaded {

counterLock.Lock()

if counter < maxLinks {

counter++

wg.Add(1)

go crawl(fullUrl, urlChan, resultChan, &wg, visitedUrls, &counter, &counterLock)

}

counterLock.Unlock()

}

}Channels for communication

A key idea in the CSP paper we mentioned earlier is using channels to communicate between different concurrent processes. Go has built-in support for channels, and as we will see, they are quite handy for sharing information between different goroutines.

We will use two channels, urlChan and resultChan, these channels will hold the different extracted URLs that need to be crawled, and the results from each crawling operation, respectively. To create both channels, let’s add these two lines at the beginning of the main function.

// In main()

urlChan := make(chan string)

resultChan := make(chan string)We can send URLs into the channel using the <- operator: urlChan <- url and we can read from it either using a for loop or urlChan -> , the same applies for the resultChan channel.

The new crawl method

With this in mind, let’s update the crawl function so that it takes both channels as parameters and use a wait group to wait for the crawl goroutine to finish.

func crawl(link string, urlList chan<- string, resultList chan<- string, wg *sync.WaitGroup, visitedUrls *sync.Map, counter *int, counterLock *sync.Mutex) {

defer wg.Done()

basePage, err := crawler.FetchUrl(link)

if err != nil {

fmt.Println("Error: ", err)

return

}

resultList <- basePage.Title

visitedUrls.Store(link, true)

for _, url := range basePage.UrlList {

counterLock.Lock()

if *counter < maxLinks {

*counter++

urlList <- url

}

counterLock.Unlock()

}

}Notice the use of the defer keyword: this defers the call to wg.Done() until the end of the crawl function. As you might have guessed, wg.Done() decrements the wait group counter by one, signalling that the goroutine has finished.

Whenever we crawl a page, we send the result to the resultChan channel and new URLs to the urlList channel. Additionally, we pass a reference to sync.Map, which refers to the visitedUrls map. This map holds a hash table with each URL as a key, indicating whether it has been crawled or not. We also pass a counter and a mutex (referred to as counterLock) by reference to the function. This will be explained in more detail in the next subsection.

counterLock. If we passed these by value, we would be working on copies of these objects, which could result in inconsistencies.Using a mutex for thread safety

As previously mentioned, we need a way to keep track of the number of crawled URLs so that our crawler doesn’t run indefinitely trying to crawl other websites. In the sequential version, this was as simple as defining an integer variable and incrementing it. However, in the concurrent version, it is not that straightforward. When launching multiple goroutines asynchronously, we cannot predict which one will finish first and increment the counter. This unpredictability causes the program to lose its deterministic nature. As a result, one goroutine might read an outdated value of the counter, leading to race conditions (which will be explained at the end of this article). To address this issue, we use a Mutex (mutual exclusion). Mutexes are synchronization primitives found in most concurrency packages across different programming languages (check out this funny analogy in SO).

In Go, we can use mutexes through the sync package. When a goroutine is modifying the counter, it performs three steps in this order:

-

Lock the counter: The goroutine locks the counter variable so that no other goroutines can modify it during this operation.

-

Increment the counter: The goroutine safely increments the counter in the current iteration.

-

Unlock the counter: The goroutine unlocks the counter, making it accessible to other goroutines.

By using mutexes, we ensure that the counter is incremented correctly and that only one goroutine can modify it at a time, thereby preventing race conditions and maintaining the integrity of our data. To use the mutex, we first need to create it:

// Mutex to protect the shared resource

var counterLock sync.MutexOnce this mutex is created (at the start of the main function for simplicity) we can call the counterLock.Lock() and the counterLock.Unlock() methods to lock and unlock the mutex flag respectively. It is important to make sure to release mutexes whenever we use them. We implement the locking and unlocking of mutexes in the for loop that iterates through the list of URLs.

for _, u := range basePage.UrlList {

fullUrl, err := crawler.ResolveURL(u, startUrl)

if err != nil {

fmt.Println("Error: ", err)

continue

}

if _, loaded := visitedUrls.LoadOrStore(fullUrl, true); !loaded {

counterLock.Lock()

if counter < maxLinks {

counter++

wg.Add(1)

go crawl(fullUrl, urlChan, resultChan, &wg, visitedUrls, &counter, &counterLock)

}

counterLock.Unlock()

}

}Closing channels properly

We finish this program by closing the channels that were created to hold the URLs and results. It’s important to close a channel only when all communication through that channel is over. To ensure this, we call wg.Wait(), which waits until all goroutines have finished (and thus the wait group counter is decremented to zero).

go func() {

wg.Wait()

close(resultChan)

}()

go func() {

wg.Wait()

close(urlChan)

}()

for r := range resultChan {

fmt.Println("Title: ", r)

}Notice that the call to wg.Wait() and close(channel)are in their own goroutine. This is because wg.Wait() is a blocking call. If we put it in the main thread, it would block the execution, and the for loop that prints the results wouldn’t be reached.

Where to go from here?

We now have a complete working concurrent crawler, you can refactor some of the code for a cleaner main function and you can expand on the features. This tutorial showcased how to use Go concurrency primitives to build a useful concurrent program. Running this crawler on a 6-core CPU is blazingly fast, as several go-routines are executed in parallel due to the concurrent design of the program.

Concurrency is a beautiful topic, but a hard one… I don’t want to scare you off this topic, but keep in mind that employing concurrency introduces a new set of complexities such as race conditions and deadlocks. These complexities can result in problems that are hard to debug. However, using the right tools and following best practices for concurrent programming (such as those by Microsoft, albeit it’s for C++) can help in building robust concurrent programs.

👉 Find the full code in Github repo: annis-souames/concurrent-crawler: A concurrent async web crawler in Go, with support of multi-threading. (github.com)